What causes your brain to interpret complex sentences differently

Words, like people, can achieve a lot more when they work together than when they stand on their own. Words working together make sentences, and sentences can express meanings that are unboundedly rich. How the human brain represents the meanings of sentences has been an unsolved problem in neuroscience, but my colleagues and I recently published a paper in the journal Cerebral Cortex that casts some light on the question. Here, my aim is to give a bigger-picture overview of what that work was about, and what it told us that we did not know before.

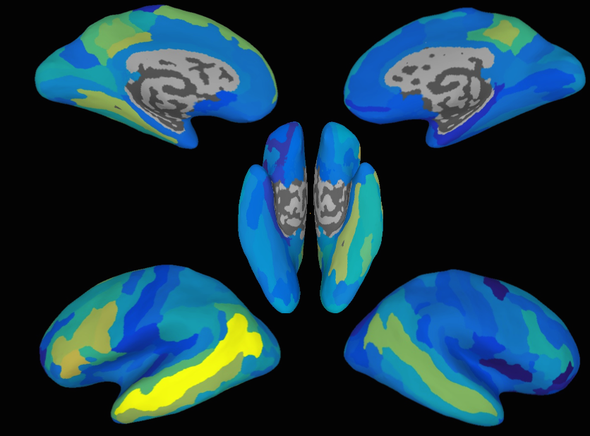

To measure people's brain activation, we used fMRI (functional Magnetic Resonance Imaging). When fMRI studies were first carried out, in the early 1990s, they mostly only asked which parts of the brain "light up," i.e. which brain areas are active when people perform a given task.

However, in the last decade or so, a different approach has been rapidly gaining in popularity and influence: instead of just asking which areas light up, neuroscientists try to carry out what is known as "neural decoding," in which we observe a pattern of brain activation and then try to figure out what gave rise to it. As an illustration, consider walking through a forest and seeing an animal footprint in the mud. By looking at the pattern in the mud, i.e. the shape of the footprint, we might be able to figure out which animal made it. But in order to do that, we first need to learn what the footprints of different animals tend to look like, and, harder still, learn how to decode these footprints even when the mud is smudged or the imprint is faint.

Neural decoding is very similar. Instead of deducing which animal gave rise to a pattern in the mud, which draws on what one might know about the footprints of familiar animals, we decode which stimuli (words and sentences, in this case) might have given rise to a given brain pattern, based on the patterns we have seen in the past from known words and sentences.
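The footprint-matching idea can be sketched in a few lines of Python. This is a minimal illustration, not the decoder used in the study: it assumes we already have one stored activation pattern per known word (the six-voxel numbers below are hypothetical) and decodes a new, noisy pattern by picking the stored pattern it correlates with most strongly.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length activation patterns."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    norm_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    norm_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (norm_x * norm_y)


def decode(observed, templates):
    """Return the label whose stored pattern best matches the observed pattern."""
    return max(templates, key=lambda label: pearson(observed, templates[label]))


# Hypothetical "footprints": toy activation patterns over six voxels, standing in
# for patterns recorded earlier while people read known words.
templates = {
    "dog":    [0.9, 0.1, 0.4, 0.0, 0.7, 0.2],
    "beach":  [0.1, 0.8, 0.0, 0.6, 0.2, 0.9],
    "soccer": [0.5, 0.3, 0.9, 0.1, 0.0, 0.4],
}

# A "smudged footprint": the beach pattern with some made-up noise added.
noise = [0.05, -0.1, 0.08, -0.03, 0.1, -0.06]
observed = [v + e for v, e in zip(templates["beach"], noise)]

print(decode(observed, templates))  # beach
```

Correlation is used here because it ignores overall shifts and scalings of signal strength; a realistic decoder would compare patterns over thousands of voxels and would be tested on data it was not trained on.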

The new aspect of our study was that neural decoding had not previously been achieved at the level of entire sentences. To give a rough idea of why sentence-level decoding is hard, let us return to the animal footprint analogy. Suppose the person inside the MRI scanner were just reading a single word at a time. This would be like seeing just one footprint in the mud, and trying to figure out which animal it came from. In contrast, when the person in the scanner is reading an entire sentence, brain activation patterns from several words are present at the same time. Decoding that is like having several different species of animal all run over the same piece of wet mud together, and then trying to identify as many of those animals as possible from the compound mass of tracks.
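A toy version of the "mixed tracks" problem: assume, purely for illustration, that a sentence's pattern is simply the average of its words' patterns (real brain responses are not this simple), and search for the set of known words whose blended pattern best matches the observed one. The word patterns are hypothetical numbers, and exhaustive search is an assumption chosen for clarity, not the study's method.

```python
import math
from itertools import combinations


def pearson(x, y):
    """Pearson correlation between two equal-length activation patterns."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    norm_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    norm_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (norm_x * norm_y)


def mean_pattern(patterns):
    """Blend several patterns by averaging them voxel by voxel."""
    return [sum(values) / len(values) for values in zip(*patterns)]


def decode_words(observed, word_patterns, n_words):
    """Find the n_words whose blended pattern best matches the observed pattern.

    Tries every combination, so it is only practical for tiny vocabularies.
    """
    def score(combo):
        return pearson(observed, mean_pattern([word_patterns[w] for w in combo]))

    return set(max(combinations(sorted(word_patterns), n_words), key=score))


# Hypothetical single-word patterns over six voxels.
word_patterns = {
    "family": [0.2, 0.9, 0.1, 0.5, 0.3, 0.0],
    "played": [0.7, 0.2, 0.8, 0.1, 0.4, 0.3],
    "beach":  [0.1, 0.8, 0.0, 0.6, 0.2, 0.9],
    "soccer": [0.5, 0.3, 0.9, 0.1, 0.0, 0.4],
    "empty":  [0.6, 0.0, 0.2, 0.9, 0.8, 0.1],
}

# A toy sentence pattern: three word "footprints" blended together.
observed = mean_pattern([word_patterns[w] for w in ("family", "played", "beach")])
print(sorted(decode_words(observed, word_patterns, 3)))  # ['beach', 'family', 'played']
```

The number of word sets to check grows combinatorially with vocabulary size, so this brute-force search only serves to make the idea concrete.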

Our study also went beyond that. We built a computer model that didn't simply learn the "neural footprints" of specific words. The model also used information about different sensory, emotional, social and other aspects of the words, so that it could learn to predict brain patterns for new words, and also for new sentences made out of recombinations of the words. Extending our animal footprint metaphor, this would be as if we were trained to recognize the footprints of a deer and of a cow, and then were confronted for the first time with a footprint that we had never seen before, e.g. that of a moose. If we have a model that tells us that a moose is a bit like a cow-sized deer, then that model can predict that a moose footprint will be a bit like a cow-footprint-sized deer-print. That prediction isn't exactly right, but it isn't far off either. It's good enough to do a lot better than a random guess.
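The "cow-sized deer" reasoning can be sketched as a regression from word features to voxel activations. In this minimal sketch the three features, the four training words and the four-voxel patterns are all hypothetical, and ridge regression is a common choice assumed here, not necessarily the mapping the study used. Because the toy "recorded" patterns are generated from a known made-up rule, we can check that the fitted model makes a sensible prediction for "moose", a word it never saw.

```python
def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix [a | b]
    for col in range(n):
        # Swap in the row with the largest entry in this column for stability.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back-substitution on the upper-triangular system.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_ridge(features, patterns, lam=1e-6):
    """Fit one linear map per voxel: solves (X^T X + lam I) w = X^T y for each voxel."""
    d = len(features[0])
    xtx = [[sum(f[i] * f[j] for f in features) + (lam if i == j else 0.0)
            for j in range(d)] for i in range(d)]
    weights = []
    for v in range(len(patterns[0])):
        xty = [sum(f[i] * p[v] for f, p in zip(features, patterns)) for i in range(d)]
        weights.append(solve(xtx, xty))
    return weights  # weights[v][i]: how strongly feature i drives voxel v


def predict(weights, feature_vector):
    """Predict a voxel pattern for any word described by the same features."""
    return [sum(w * f for w, f in zip(row, feature_vector)) for row in weights]


# Hypothetical features per word: [size, has_antlers, domestic].
word_features = {
    "deer":  [0.4, 1.0, 0.0],
    "cow":   [0.8, 0.0, 1.0],
    "dog":   [0.3, 0.0, 1.0],
    "horse": [0.9, 0.0, 1.0],
}

# Made-up rule (4 voxels x 3 features) that generates the toy "recorded" patterns,
# used only so the example can be checked; real brain data follows no known rule.
HIDDEN_RULE = [
    [1.0, 0.2, 0.0],
    [0.0, 1.0, 0.5],
    [0.3, 0.0, 1.0],
    [0.5, 0.5, 0.5],
]

train_words = ["deer", "cow", "dog", "horse"]
X = [word_features[w] for w in train_words]
Y = [predict(HIDDEN_RULE, word_features[w]) for w in train_words]
weights = fit_ridge(X, Y)

moose = [0.8, 1.0, 0.0]  # "a cow-sized deer": never seen during training
print([round(v, 2) for v in predict(weights, moose)])  # [1.0, 1.0, 0.24, 0.9]
```

A real model would use many more features (the study drew on sensory, emotional, social and other attributes) and many more voxels, and its prediction for a new word would only be approximately right, much like the predicted moose footprint.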

Along similar lines, our computer model could predict the brain pattern for a new sentence that it had not been trained on, as long as it had been trained on enough of the words in that sentence in different contexts. For example, our model could predict the brain pattern for "The family played at the beach," using the patterns that it had been trained on for other sentences sharing some of the same words, such as "The young girl played soccer" and "The beach was empty."
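One simple way to recombine words into a sentence prediction, assumed here for illustration and not necessarily what the study did, is to average the predicted patterns of the sentence's content words. The sketch then checks the predictions with a pairwise matching test: given two held-out sentences and their two scans, does pairing each prediction with its own scan score better than the swapped pairing? Guessing would be right half the time. All numbers, and the second sentence, are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length activation patterns."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    norm_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    norm_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (norm_x * norm_y)


def mean_pattern(patterns):
    """Average several patterns voxel by voxel."""
    return [sum(values) / len(values) for values in zip(*patterns)]


def predict_sentence(sentence, word_predictions):
    """Predict a sentence's pattern as the mean of its words' predicted patterns.

    Words with no stored prediction (e.g. "the", "at") are skipped.
    """
    words = sentence.lower().rstrip(".").split()
    return mean_pattern([word_predictions[w] for w in words if w in word_predictions])


def pairwise_match(pred_a, pred_b, obs_a, obs_b):
    """True if pairing each prediction with its own scan beats the swapped pairing."""
    correct = pearson(pred_a, obs_a) + pearson(pred_b, obs_b)
    swapped = pearson(pred_a, obs_b) + pearson(pred_b, obs_a)
    return correct > swapped


# Hypothetical word-level predictions over five voxels, standing in for what a
# model trained on other sentences might produce.
word_predictions = {
    "family": [0.8, 0.1, 0.3, 0.6, 0.0],
    "played": [0.2, 0.9, 0.4, 0.1, 0.5],
    "beach":  [0.1, 0.3, 0.9, 0.0, 0.7],
    "young":  [0.6, 0.0, 0.2, 0.9, 0.3],
    "girl":   [0.7, 0.2, 0.0, 0.8, 0.1],
    "sang":   [0.4, 0.6, 0.0, 0.5, 0.0],
}

# Two held-out sentences; the second one is an invented example.
pred_a = predict_sentence("The family played at the beach.", word_predictions)
pred_b = predict_sentence("The young girl sang.", word_predictions)

# Hypothetical observed scans for those two sentences (toy numbers).
obs_a = [0.3, 0.5, 0.6, 0.2, 0.4]
obs_b = [0.6, 0.2, 0.1, 0.7, 0.2]

print(pairwise_match(pred_a, pred_b, obs_a, obs_b))  # True
```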

This process of using a computer model to extract information from brain data is, in many ways, the same as other types of technology that are becoming woven into our everyday lives. Computer models that extract meaningful information from large patterns of data are developed in the field of research known as "machine learning," also often referred to as "data science." When you point your phone camera at someone and it draws a box around their face, the phone is taking in lots of data (millions of pixels) and extracting the meaningful information of where the face is.

Voice-recognition software such as Siri takes in lots of data about rapidly changing air vibrations (speech sounds) and extracts words from them. Neural decoding takes in brain data in the form of 3-dimensional pixels that depict brain activation on fMRI scans (called "voxels") and extracts information from them. In our study, that information consisted of the meanings of words and sentences, which people were reading while their brains were being scanned.
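To a decoder, a scan is ultimately a long list of numbers. A minimal sketch, assuming the scan is stored as a nested x-y-z grid of activation values (real pipelines use dedicated neuroimaging file formats and array libraries instead), shows how voxels become a single activation pattern that can be compared with others. The `mask` parameter is a hypothetical convenience for keeping only some voxels, e.g. those inside the brain.

```python
def volume_to_pattern(volume, mask=None):
    """Flatten a 3-D grid of voxel values, indexed [x][y][z], into one list.

    If a mask of the same shape is given, only voxels where it is True are kept.
    """
    pattern = []
    for x, plane in enumerate(volume):
        for y, row in enumerate(plane):
            for z, value in enumerate(row):
                if mask is None or mask[x][y][z]:
                    pattern.append(value)
    return pattern


# A hypothetical 2 x 2 x 2 "scan": eight voxels of activation values.
volume = [
    [[0.1, 0.2], [0.3, 0.4]],
    [[0.5, 0.6], [0.7, 0.8]],
]
mask = [
    [[True, False], [True, True]],
    [[False, False], [True, True]],
]

print(volume_to_pattern(volume))        # [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
print(volume_to_pattern(volume, mask))  # [0.1, 0.3, 0.4, 0.7, 0.8]
```

Every scan must be flattened in the same voxel order, so that position i in one pattern refers to the same location in the brain as position i in another.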

To decode information about sets of words, we needed an interdisciplinary team of people. The study was led by Andy Anderson, a postdoctoral research fellow in my lab. Andy has expertise that spans all the required domains: computational models of the meanings of words, machine learning, and brain imaging. Another key member of the team was Dr. Jeffrey Binder of the Medical College of Wisconsin, a neurologist and world-renowned researcher of how the brain represents meaning.

But the full team was much larger than that: our paper has nine authors, all of whom played different and crucial roles, and the authors span six different nationalities, from both industry and academia. Our funding came in part from two different government agencies (the Intelligence Advanced Research Projects Activity and the National Science Foundation). Scientific advances these days are often made by large collaborative teams, made up of people from many different countries of origin.

Decoding sentences from the brain may well be intriguing, but why does it matter? There are two answers to this question. The first is that the human brain literally makes us who we are, and language is one of the most fundamental aspects of human cognition.

Beyond the intrinsic scientific interest, such work may also one day have practical applications. Our study extracts meaning from people's brains, and there are many people with traumatic brain injuries who have meaning trapped in their heads that they are unable to express verbally, e.g. patients with damage to a brain region called Broca's area.

Our study also used computer models to represent meaning. Existing computer models work much better than they did just a few years ago, as can be seen from the success of systems such as Siri and Google Translate. But these existing models also have many imperfections, as can also be seen from those same computer systems, which all too often produce garbled output. By far the best system for representing meaning in the world is the human brain. In seeking to understand how the brain achieves that, we might be able to make our computer models of meaning work better.

These practical pay-offs won't come tomorrow, or even next year. The question of how the brain represents meaning is extremely difficult, and our new paper, although an advance, leaves many issues still unaddressed. To tackle difficult problems, science needs to work with a long time horizon. Just as the words in a sentence work together to build a richer meaning, many individual scientific studies jointly help us to better understand our world.

The views expressed are those of the author(s) and are not necessarily those of Scientific American.

Source: https://blogs.scientificamerican.com/guest-blog/how-the-brain-decodes-sentences/
